Artificial Intelligence (AI) is rapidly redefining how we gather, interpret, and act on data. In global development and policy, this evolution is changing the face of evaluation: the process of measuring human impact and understanding social change.

From predictive analytics to natural language processing, AI in Evaluation offers speed, scale, and precision that were hard to imagine a decade ago. Yet behind every algorithm lies a question: can technology truly understand the depth of human experience, culture, and emotion that define real impact?

This blog explores seven powerful ways AI in Evaluation is improving how we understand human impact, and the areas where it still struggles to grasp the complexity of human stories.

1. Automating Data Collection and Processing

AI tools now streamline how evaluators gather and clean massive amounts of data from surveys, social media, and sensors. Algorithms detect patterns and inconsistencies faster than any human analyst could.
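To make "detecting inconsistencies" concrete, here is a minimal sketch of the kind of automated cleaning pass such tools run. It is a deliberately simple rule-based stand-in rather than a machine learning model, and the field names (`respondent_id`, `age`, `household_size`) and thresholds are hypothetical. It flags duplicate IDs, impossible ages, and household sizes that are outliers by the modified z-score (based on the median absolute deviation, which is less distorted by extreme values than the mean).

```python
from statistics import median

def flag_inconsistencies(records, max_age=110, mad_threshold=3.5):
    """Return (respondent_id, reason) flags for a human reviewer to check.

    Field names and thresholds are illustrative assumptions, not a standard.
    """
    flags = []
    seen = set()
    for r in records:
        rid = r["respondent_id"]
        # The same respondent ID appearing twice usually means a duplicate entry.
        if rid in seen:
            flags.append((rid, "duplicate respondent_id"))
        seen.add(rid)
        # Ages outside a plausible range are likely typos.
        if not 0 <= r["age"] <= max_age:
            flags.append((rid, "age out of range"))

    # Modified z-score: 0.6745 * (x - median) / MAD, flagged above the threshold.
    sizes = [r["household_size"] for r in records]
    med = median(sizes)
    mad = median(abs(s - med) for s in sizes)
    if mad > 0:
        for r in records:
            score = 0.6745 * (r["household_size"] - med) / mad
            if abs(score) > mad_threshold:
                flags.append((r["respondent_id"], "household_size outlier"))
    return flags

# Invented survey rows for illustration only.
records = [
    {"respondent_id": "A1", "age": 34, "household_size": 5},
    {"respondent_id": "A2", "age": 29, "household_size": 4},
    {"respondent_id": "A3", "age": 41, "household_size": 6},
    {"respondent_id": "A2", "age": 52, "household_size": 5},
    {"respondent_id": "A4", "age": 230, "household_size": 40},
]
flags = flag_inconsistencies(records)
print(flags)
```

Note that the output is a list of flags, not corrections: deciding whether a household of 40 is a typo or an extended family compound is exactly the contextual judgment that still needs a person.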

For example, in large-scale health evaluations across Africa, AI can track vaccination trends or disease outbreaks in real time. This speed enables faster interventions and more responsive policy design.

However, while automation saves time, it also risks missing contextual cues: the local reasons why the data look the way they do. Numbers can tell us what happened, but not always why people made those choices.

2. Enhancing Predictive and Prescriptive Insights

Modern evaluation increasingly moves from descriptive (“what happened”) to predictive (“what could happen”) and even prescriptive (“what should we do”) analysis. AI in Evaluation makes this leap possible.

Machine learning models forecast the likely outcomes of interventions: for example, which communities are most at risk of students dropping out of education programs, or where social safety nets are most vulnerable to fraud.
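As a rough illustration of how a dropout forecast works, the sketch below fits a logistic regression by plain gradient descent and returns a dropout probability for a new learner. Everything here is an assumption for illustration: the two features (share of school days missed, distance to school scaled by 10 km), the six training rows, and the labels are invented, and real programs would use far richer data and an established library.

```python
import math

def sigmoid(z):
    """Logistic function mapping any real-valued score to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic(X, y, lr=0.5, epochs=3000):
    """Fit logistic-regression weights with batch gradient descent on the log-loss."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            # Error for one learner: predicted probability minus the observed label.
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        # Step against the average gradient.
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def dropout_risk(w, b, x):
    """Predicted probability that a learner with features x drops out."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)

# Hypothetical features: [share of school days missed, distance to school / 10 km]
X = [[0.05, 0.1], [0.10, 0.2], [0.08, 0.3], [0.40, 0.8], [0.55, 0.9], [0.35, 0.6]]
y = [0, 0, 0, 1, 1, 1]  # 1 = dropped out (invented labels)

w, b = train_logistic(X, y)
print(round(dropout_risk(w, b, [0.50, 0.8]), 2))   # frequent absence, far from school
print(round(dropout_risk(w, b, [0.05, 0.15]), 2))  # rare absence, close to school
```

The model only learns patterns present in its training rows; if some groups are missing or mislabeled in that data, its risk scores for those groups inherit the gap.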

These insights help donors and policymakers allocate resources more effectively. Yet when training data lack diversity, as often happens in African and other marginalized contexts, AI predictions may amplify bias rather than correct it.

3. Natural Language Processing for Qualitative Analysis

Traditionally, analyzing interviews or focus group transcripts was slow, manual work. Today, AI-powered natural language processing (NLP) tools can analyze thousands of open-ended responses in minutes.

They detect themes, tone, and sentiment, sometimes even subtle emotional shifts. This gives evaluators a new lens on human behavior.
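Production NLP tools rely on statistical or neural language models, but the basic idea of theme detection can be sketched with a keyword lexicon. The minimal example below assumes a hand-written, hypothetical lexicon (`THEMES`) and tags each open-ended response with the themes whose keywords it contains, then counts how often each theme appears across all responses.

```python
import re
from collections import Counter

# Hypothetical theme lexicon; real tools learn these associations from data.
THEMES = {
    "access": {"distance", "far", "transport", "road"},
    "cost": {"fees", "expensive", "afford", "money"},
    "quality": {"teacher", "teachers", "books", "classroom"},
}

def tag_themes(responses, themes=THEMES):
    """Return per-response theme lists and an overall theme count."""
    counts = Counter()
    per_response = []
    for text in responses:
        # Lowercase word tokens; punctuation is ignored.
        tokens = set(re.findall(r"[a-z']+", text.lower()))
        found = sorted(name for name, words in themes.items() if tokens & words)
        counts.update(found)
        per_response.append(found)
    return per_response, counts

responses = [
    "The school is too far and there is no transport",
    "Fees are expensive for us",
    "Teachers are kind but we lack books",
    "Our pockets cannot carry it",  # invented idiom meaning "we cannot afford it"
]
per_response, counts = tag_themes(responses)
print(per_response)
print(counts)
```

The last response expresses a cost concern through an idiom, and because none of its words appear in the lexicon it receives no theme at all, a small-scale version of the cultural blind spot that more sophisticated models can also have.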

But here’s the limitation: language is deeply cultural. Expressions of satisfaction, disappointment, or trust vary widely across regions and dialects. NLP engines often misinterpret African idioms or emotional nuance, showing why AI in Evaluation still needs human interpreters to provide cultural depth.

4. Improving Transparency and Accountability

AI-enabled data systems can improve the credibility of evaluations through transparent data tracking. Blockchain-based evaluation systems, for instance, can create tamper-evident audit trails of survey inputs, strengthening data integrity and trust between stakeholders.
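The tamper-evidence behind such audit trails comes from hash chaining: each entry stores a cryptographic hash of its own contents plus the previous entry's hash, so editing any earlier record breaks every link after it. The sketch below shows only that core idea using Python's standard `hashlib` and `json`; it is a simple hash-chained log, not a full blockchain (there is no distributed ledger or consensus), and the record fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def entry_hash(prev_hash, record):
    """SHA-256 over the record (serialized with sorted keys) plus the previous hash."""
    payload = json.dumps(record, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

def append(log, record):
    """Add a survey record to the log, linking it to the previous entry."""
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "prev": prev, "hash": entry_hash(prev, record)})

def verify(log):
    """Recompute every link; any edited record or broken link returns False."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev or entry["hash"] != entry_hash(prev, entry["record"]):
            return False
        prev = entry["hash"]
    return True

log = []
append(log, {"respondent_id": "A1", "question": "q1", "answer": "yes"})
append(log, {"respondent_id": "A2", "question": "q1", "answer": "no"})
print(verify(log))

# Quietly changing an earlier answer is detected on verification.
log[0]["record"]["answer"] = "no"
print(verify(log))
```

A chain like this proves that records were not altered after entry; it says nothing about whether the questions were fair or the answers were honestly given.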

This transparency helps donors trace how resources translate into outcomes, and it makes data manipulation harder in politically sensitive programs.

Still, technology can only ensure process transparency, not moral accountability. It cannot judge whether an evaluation question is ethically framed or whether community voices are respected in design.

5. Expanding Reach and Inclusion Through Digital Access

Mobile-based and voice-enabled survey systems powered by AI make it possible to reach remote or illiterate populations, even in areas with limited internet connectivity.

In rural Africa, where traditional surveys often exclude the most vulnerable, AI in Evaluation can help bridge inclusion gaps. Voice-to-text systems let respondents share views in local languages, expanding data diversity.

Yet, inclusion through technology is never absolute. Gender gaps in phone ownership, digital literacy, and power relations still shape who gets heard. AI may amplify the voices of the connected while silencing those without access.

6. Real-Time Learning and Adaptive Evaluation

Unlike traditional evaluations that happen after a project ends, AI in Evaluation supports adaptive learning: continuous feedback loops during program implementation.

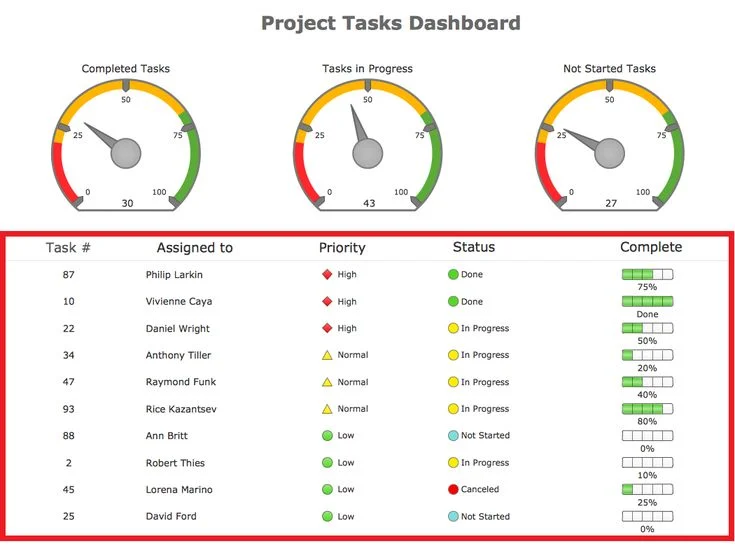

For instance, AI dashboards can flag early warning signals, such as declining school attendance, delayed cash transfers, or spikes in misinformation, enabling teams to adjust in real time.
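One simple way a dashboard might raise such a flag is to compare each new reading against a trailing baseline. The sketch below assumes a hypothetical weekly attendance-rate series and flags any week that falls more than a chosen fraction below the average of the preceding weeks; the window length and 15% threshold are illustrative choices, not recommended values, and real systems often use more robust statistical or learned models.

```python
def early_warnings(series, window=4, drop_threshold=0.15):
    """Return indices where a value falls more than drop_threshold
    (as a fraction) below the mean of the previous `window` values."""
    alerts = []
    for i in range(window, len(series)):
        baseline = sum(series[i - window:i]) / window
        # Relative drop versus the trailing baseline.
        if baseline > 0 and (baseline - series[i]) / baseline > drop_threshold:
            alerts.append(i)
    return alerts

# Invented weekly attendance rates for one school.
attendance = [0.92, 0.90, 0.91, 0.93, 0.89, 0.70, 0.88]
print(early_warnings(attendance))
```

Here only the sudden dip to 0.70 is flagged. Because the baseline is built from reported values, a week of missing or misreported data would shift it, which is one way poor data quality feeds directly into misleading alerts.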

This turns evaluation from a backward-looking exercise into a living, learning process. But adaptive systems are only as good as the data fed into them; poor data quality or incomplete reporting can lead to misguided decisions.

7. Human-AI Collaboration: The Future of Evaluation

The most powerful transformation lies not in replacing human evaluators but in augmenting them.

AI can process volumes of information that humans cannot. Humans, in turn, bring empathy, ethical reasoning, and cultural awareness that machines lack.

A balanced model, in which evaluators guide the interpretation of AI outputs and AI enhances analytical power, represents the real promise of AI in Evaluation.

However, this balance demands strong data governance, ethical frameworks, and capacity building, especially in the Global South. Without these, AI could deepen global inequities in how “impact” is defined and measured.

Where AI Still Falls Short

Despite its progress, AI still struggles to capture the why behind human behavior. Algorithms analyze outcomes but rarely understand emotions, context, or cultural meaning.

A dataset may show improved school attendance, but it won’t tell us how girls feel about education, or how social pressure affects their daily choices. That human texture is where impact truly lives.

Until evaluation frameworks center human experience as much as they value data efficiency, technology will remain a powerful but incomplete partner.

Conclusion

AI in Evaluation is neither a miracle solution nor a moral threat; it is a tool. Its value depends on how we use it to advance fairness, empathy, and inclusiveness in understanding human impact.

As global evaluators, policymakers, and innovators, we must design systems that not only process data but also honor the people behind it.

Check us out!

At Insight & Social, we help organizations integrate AI in Evaluation responsibly, combining data-driven insights with deep human understanding.

Partner with us to design evaluations that don’t just measure change but truly understand it.